04. Topic Modeling

Topic modeling is an interesting use case for NLP. It is an unsupervised learning problem: there may not be any clear label or category that applies to each document; instead, each document is treated as a mixture of various topics.


Estimating these mixtures and simultaneously identifying the underlying topics is the goal of topic modeling.

Bag-of-Words: Graphical Model

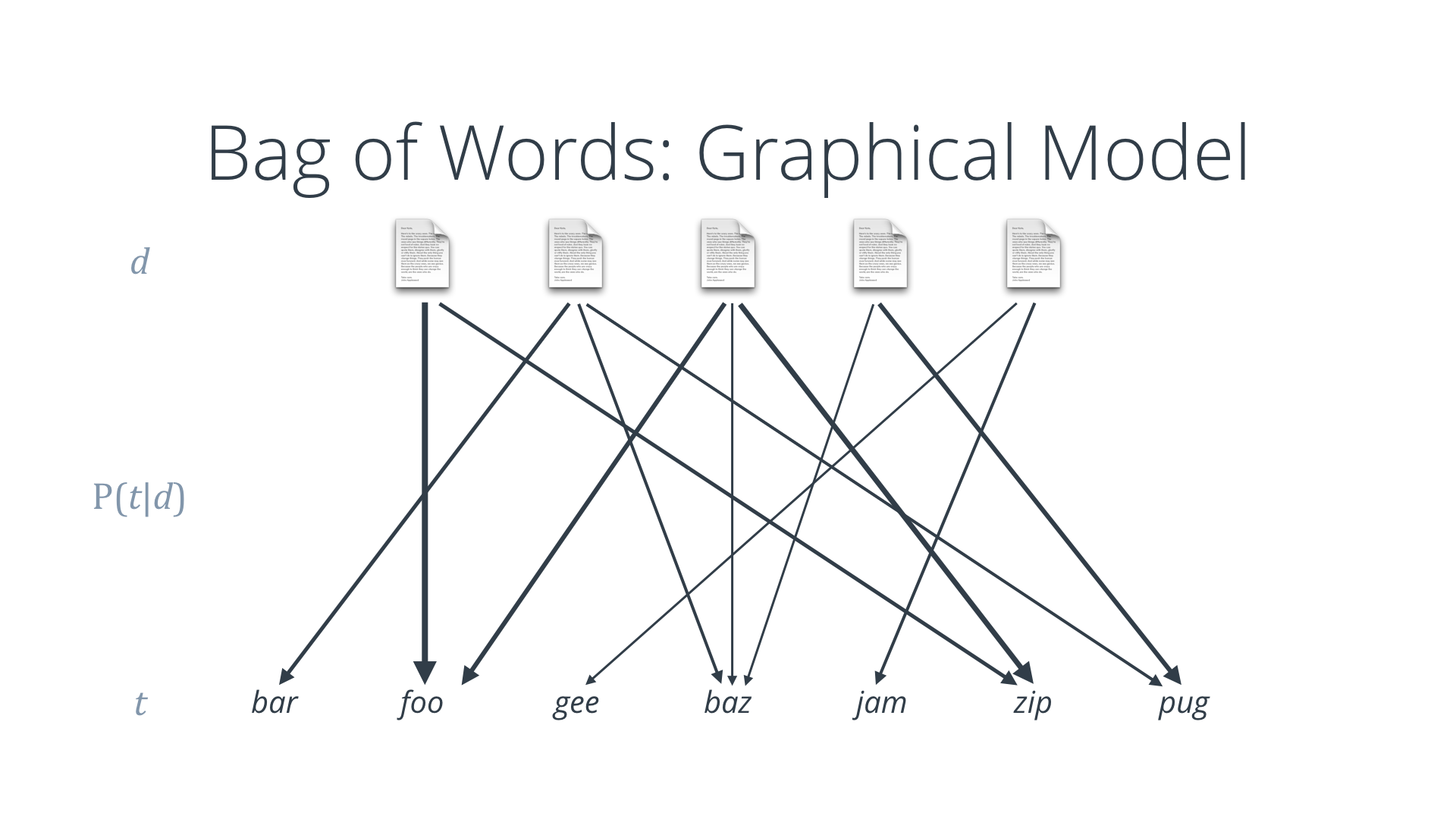

Bag of Words: Graphical Model

Note that all you can observe in a document are the words that make it up. If you think about the bag-of-words model graphically, it represents the relationship between a set of document objects and a set of word objects.

Specifically, for any given document d and observed term t, it helps answer the question "how likely is it that d generated t?", that is, it models the probability P(t | d).
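The simplest estimate of the probability that document d generated term t, P(t | d), is the fraction of d's tokens that equal t. The sketch below assumes that relative-frequency estimate. The function name `term_given_document` and the toy document are hypothetical.

```python
from collections import Counter

def term_given_document(doc_tokens):
    """Estimate P(t | d) as the relative frequency of each term t in document d."""
    counts = Counter(doc_tokens)
    total = sum(counts.values())
    return {term: n / total for term, n in counts.items()}

doc = "vote country vote game vote".split()
probs = term_given_document(doc)
print(probs["vote"])  # 0.6 (3 of the 5 tokens)
```

A full bag-of-words model stores one such probability for every document-term pair. This is why its size grows with the number of documents times the vocabulary size.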

BoW Model Complexity

QUESTION:

At most how many parameters are needed to define a Bag-of-Words model for 500 documents and 1000 unique words?

SOLUTION:

500 × 1000 = 500,000 parameters: one probability P(t | d) for every document-term pair.

Latent Variables

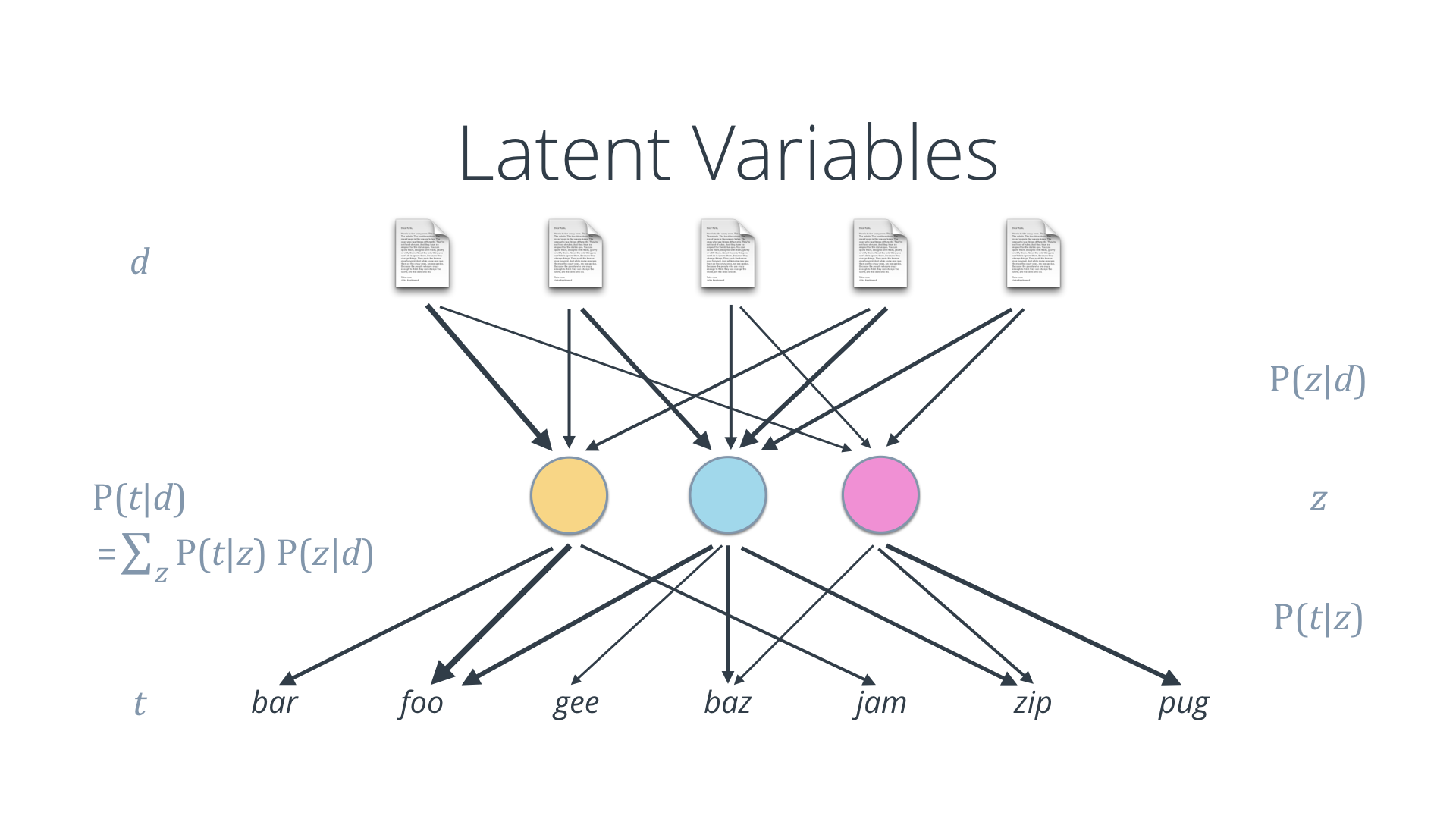

Topic Modeling: Latent Variables

What we wish to add to this model is the notion of a small set of topics, or latent variables, that actually drive the generation of words in each document. Any document is thus considered to have an underlying mixture of topics associated with it. Similarly, a topic is considered to be a mixture of words that it is likely to generate.

There are two sets of parameters or probability distributions that we now need to compute:

- the probability of topic z given document d, P(z | d), and

- the probability of term t given topic z, P(t | z).

This decomposes the document-term matrix, which can get very large when there are many documents with many words, into two much smaller matrices: a document-topic matrix holding P(z | d) and a topic-term matrix holding P(t | z). Latent Dirichlet Allocation (LDA) is a topic modeling technique built on this decomposition.
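The decomposition rests on the identity P(t | d) = Σ_z P(z | d) P(t | z), which is exactly a matrix product. Below is a minimal NumPy sketch that uses randomly drawn stand-in matrices rather than learned ones. It shows the shapes involved and the parameter savings for 500 documents, 1000 terms and 10 topics.

```python
import numpy as np

rng = np.random.default_rng(0)
n_docs, n_topics, n_terms = 500, 10, 1000

# Random stand-ins (not learned): each row is a probability distribution.
doc_topic = rng.dirichlet(np.ones(n_topics), size=n_docs)     # P(z | d), shape (500, 10)
topic_term = rng.dirichlet(np.ones(n_terms), size=n_topics)   # P(t | z), shape (10, 1000)

# P(t | d) = sum over z of P(z | d) * P(t | z), i.e. a matrix product.
doc_term = doc_topic @ topic_term                              # shape (500, 1000)

print(doc_term.shape)                                          # (500, 1000)
print(np.allclose(doc_term.sum(axis=1), 1.0))                  # each row is still a distribution
print(doc_topic.size + topic_term.size, "vs", doc_term.size)   # 15000 vs 500000
```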

LDA Model Complexity

QUESTION:

How many parameters are needed in an LDA topic model for 500 documents and 1000 unique words, assuming 10 underlying topics?

SOLUTION:

500 × 10 + 10 × 1000 = 5,000 + 10,000 = 15,000 parameters: P(z | d) for every document-topic pair plus P(t | z) for every topic-term pair.

Prior Probabilities

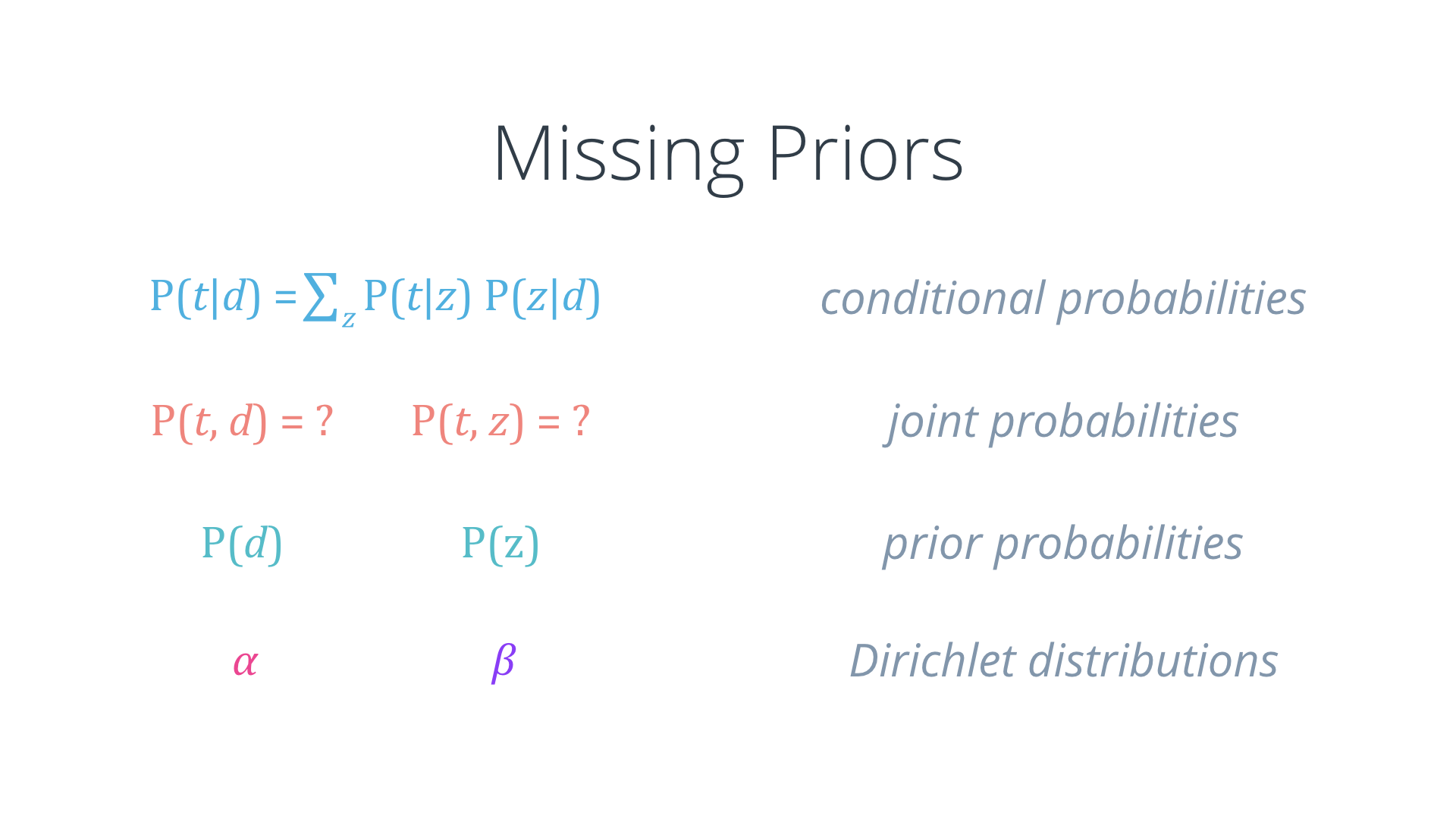

Missing Priors

You may have noticed that so far we have only been talking about conditional probabilities. What about the joint probability of a word and a document? Or that of a word and a topic?

To compute those, we need to define some prior probabilities. This is where “Dirichlet” comes in: we assume that the topic mixture of each document and the word mixture of each topic are drawn from Dirichlet distributions.

Note: Dirichlet Distributions

In the context of topic modeling, think of a Dirichlet Distribution as a probability distribution over mixtures.

For example, consider the set of topics Z = {politics, sports} and the words (or vocabulary) V = {vote, country, game}.

Let's assume that the topic politics is defined as a mixture of the words in some proportion, say, 0.6 × vote + 0.3 × country + 0.1 × game. Notice that we intentionally made the mixture coefficients sum to one.

This can be interpreted as a probability distribution over the space of these words or components: if you randomly sample a word from the topic politics, you will get vote 60% of the time, country 30% of the time, and game the remaining 10% of the time.
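To see that interpretation in action, the sketch below samples words from the politics topic with Python's `random.choices`, using the coefficients 0.6, 0.3 and 0.1 as weights. The sample size and seed are arbitrary choices.

```python
import random
from collections import Counter

vocab = ["vote", "country", "game"]
politics = [0.6, 0.3, 0.1]  # mixture coefficients of the topic; they sum to 1

random.seed(42)
samples = random.choices(vocab, weights=politics, k=10_000)
freqs = {w: c / len(samples) for w, c in Counter(samples).items()}
print(freqs)  # empirical frequencies close to 0.6 / 0.3 / 0.1
```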

Now, where do we get these coefficients $\langle 0.6, 0.3, 0.1 \rangle$ from? We can obtain them by sampling from a Dirichlet distribution, which by definition produces a vector of non-negative values that sum to 1. The parameters of the distribution (say, β) determine whether the mixtures produced are sparser (i.e., a few components with high values while the others are low) or more mixed (all components with roughly similar values), and whether there is a bias towards any particular component.
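The effect of β can be explored with NumPy's `Generator.dirichlet`. The three parameter settings below are illustrative choices. Small equal values give sparse mixtures and large equal values give near-uniform mixtures. Unequal values bias the samples, because the mean of a Dirichlet distribution is β divided by the sum of β.

```python
import numpy as np

rng = np.random.default_rng(0)

# Small, equal parameters push each sample's mass onto a few components (sparse mixtures).
sparse = rng.dirichlet([0.1, 0.1, 0.1], size=1000)
# Large, equal parameters give samples close to the uniform mixture <1/3, 1/3, 1/3>.
mixed = rng.dirichlet([50.0, 50.0, 50.0], size=1000)
# Unequal parameters bias samples toward components with larger values;
# the mean of Dirichlet(beta) is beta / sum(beta) = <0.6, 0.3, 0.1> here.
biased = rng.dirichlet([6.0, 3.0, 1.0], size=1000)

for name, samples in [("sparse", sparse), ("mixed", mixed), ("biased", biased)]:
    print(name, np.round(samples[:3], 2).tolist())   # three example mixtures

print(np.allclose(biased.sum(axis=1), 1.0))          # every sample sums to 1
print(np.round(biased.mean(axis=0), 2))              # close to [0.6, 0.3, 0.1]
```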

If there are k components, then β is a k-dimensional vector $\langle \beta_1, \beta_2, \ldots, \beta_k \rangle$ of positive values, with each element essentially capturing how biased the distribution is toward the corresponding component. The β values themselves need not sum to 1. It is each sampled mixture vector that must, and that constraint means the sampled vectors lie in a (k-1)-dimensional simplex.

To understand this better, consider the case k = 3 (i.e., each topic is a mixture of 3 components or words). Each 3-dimensional mixture vector sampled from a Dirichlet distribution with parameters $\beta = \langle \beta_1, \beta_2, \beta_3 \rangle$, such as $\langle 0.6, 0.3, 0.1 \rangle$, must be a point on a 2-dimensional plane, as depicted below. This plane, constrained to the range of valid (non-negative) values, forms a triangle known as a 2-dimensional simplex.

3-dimensional vector embedded in a 2-dimensional simplex (plane)
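Figures like this are commonly drawn by mapping each 3-component mixture to 2-D coordinates inside a triangle: the mixture weights act as barycentric coordinates over the triangle's corners. The sketch below is a plotting convenience, not part of LDA itself. The corner placement and the helper name `to_triangle` are assumptions.

```python
import numpy as np

# Corners of an equilateral triangle in the plane, one corner per component.
CORNERS = np.array([[0.0, 0.0], [1.0, 0.0], [0.5, np.sqrt(3) / 2]])

def to_triangle(mixtures):
    """Map 3-component mixtures (rows summing to 1) to 2-D points inside the triangle."""
    return np.asarray(mixtures) @ CORNERS

rng = np.random.default_rng(1)
points = to_triangle(rng.dirichlet([6.0, 3.0, 1.0], size=200))  # cluster near the first corner

# A pure mixture lands on a corner; the uniform mixture lands on the centroid.
print(to_triangle([[1.0, 0.0, 0.0], [1 / 3, 1 / 3, 1 / 3]]))
```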

So far, we have discussed how each topic z can be defined as a mixture of words, where the mixture coefficients are drawn from a Dirichlet distribution with parameters β. Similarly, we can define each document d as a mixture of topics, and again the coefficients can be drawn from a Dirichlet distribution, with parameters α.

Latent Dirichlet Allocation

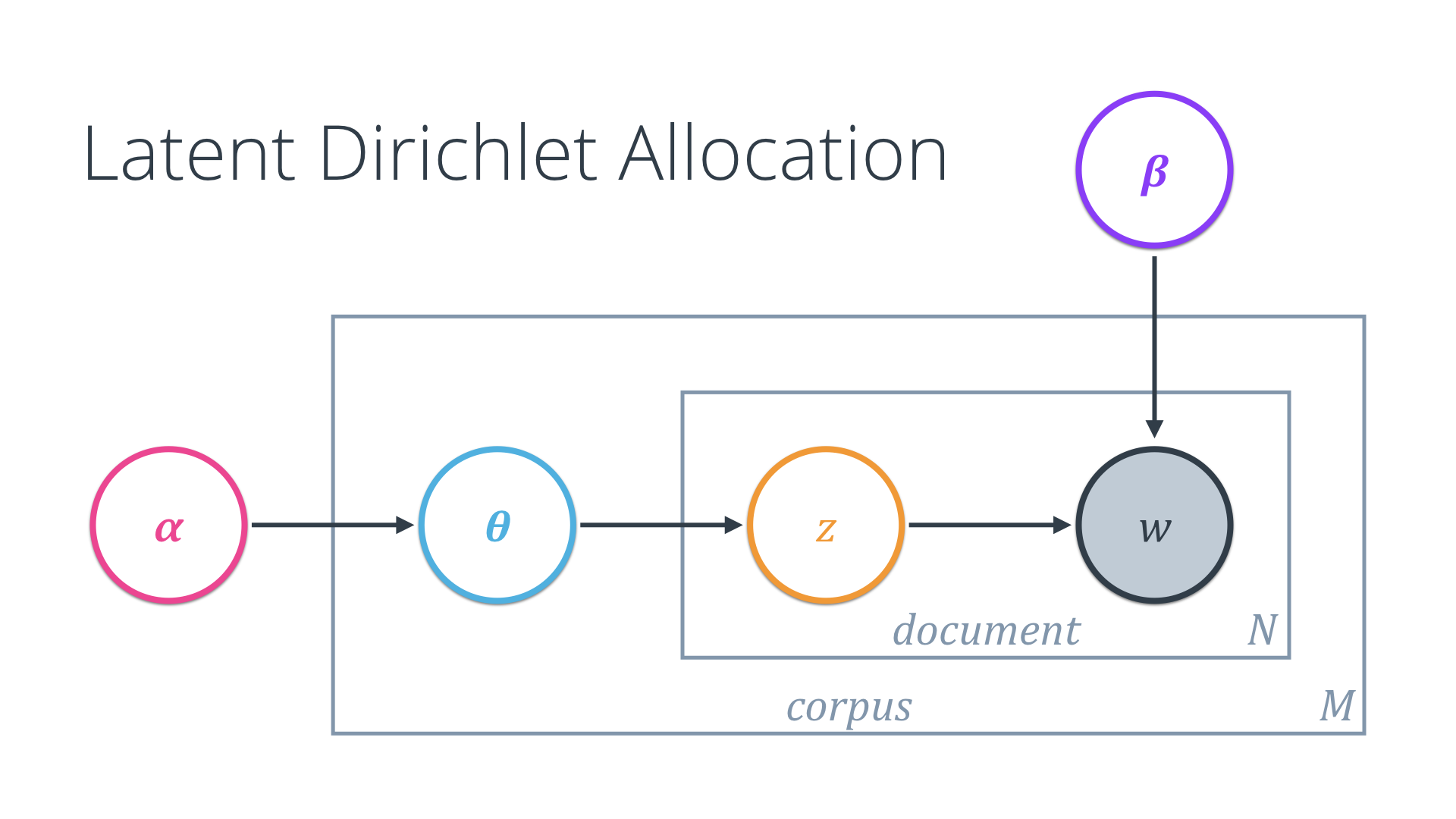

Now we are ready to build a complete picture of the LDA model.

LDA Model: Plate Diagram

- We’ll start with words w, which are the only directly observable variable in this picture. All others are hidden or latent.

- Now consider a document that consists of N words. Each word is generated by a topic z allocated to it, drawn from the mixture of topics associated with that document.

- There are M such documents in the corpus. Now, instead of treating each document as being an independent object d, let us assume that it is defined by a set of parameters or coefficients (θ). These parameters in turn generate the topic allocations.

- Finally, we introduce the priors: assume that the θ vectors are drawn from a Dirichlet distribution with parameters α. Similarly, let us define another Dirichlet distribution with parameters β that captures topic-word prior probabilities.

This visualization, also known as a plate diagram, captures the relationships between the different random variables in the LDA model.
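The generative story in the bullet list above can be sketched in a few lines of NumPy. Per-topic word distributions come from Dirichlet(β) and per-document mixtures θ come from Dirichlet(α). Each of a document's N words first gets a topic z and is then drawn from that topic. The function name, vocabulary and parameter values are hypothetical, chosen only for illustration.

```python
import numpy as np

def generate_corpus(alpha, beta, n_topics, vocab, doc_lengths, seed=0):
    """Sample a toy corpus by following LDA's generative story.

    alpha: Dirichlet parameters for per-document topic mixtures (length n_topics).
    beta: Dirichlet parameters for per-topic word mixtures (length len(vocab)).
    doc_lengths: number of words N for each of the M documents.
    """
    rng = np.random.default_rng(seed)
    # One word distribution per topic, each drawn from Dirichlet(beta).
    topic_word = rng.dirichlet(beta, size=n_topics)            # shape (K, V)
    corpus, thetas = [], []
    for n_words in doc_lengths:                                 # loop over the M documents
        theta = rng.dirichlet(alpha)                            # this document's topic mixture
        topics = rng.choice(n_topics, size=n_words, p=theta)   # topic z for each word slot
        # Each word w is drawn from the word distribution of its assigned topic.
        words = [vocab[rng.choice(len(vocab), p=topic_word[z])] for z in topics]
        corpus.append(words)
        thetas.append(theta)
    return corpus, np.array(thetas), topic_word

vocab = ["vote", "country", "election", "game", "team", "score"]
corpus, thetas, topic_word = generate_corpus(
    alpha=[0.5, 0.5], beta=[0.2] * len(vocab), n_topics=2,
    vocab=vocab, doc_lengths=[8, 8, 8],
)
for theta, doc in zip(thetas, corpus):
    print(np.round(theta, 2), " ".join(doc))
```

Only `corpus` corresponds to the observed words w. `thetas` and `topic_word` are the latent quantities that parameter estimation would have to recover.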

LDA: Use Cases

Topic modeling, document categorization

The prime use case for LDA is topic modeling: Given a corpus of documents, find out the underlying topics and their composition in each document. This can help organize documents into more meaningful categories.

Mixture of topics in a new document: P(z | w, α, β)

Also, given a new document with words w, we can compute the mixture of topics z that characterize it.
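The exact posterior P(z | w, α, β) is intractable in general, and in practice it is approximated, for example with variational inference or Gibbs sampling. The sketch below swaps in a simpler technique. It holds the topic-word probabilities fixed and estimates the new document's topic mixture by maximum-likelihood EM ("folding in"), which ignores the prior α. The two topics, the vocabulary and the helper name `infer_mixture` are hypothetical.

```python
import numpy as np

def infer_mixture(word_ids, topic_word, n_iter=100):
    """Estimate a new document's topic mixture with topic-word probabilities held fixed.

    Simplified maximum-likelihood EM; it ignores the Dirichlet prior alpha.
    """
    n_topics = topic_word.shape[0]
    theta = np.full(n_topics, 1.0 / n_topics)       # start from a uniform mixture
    word_probs = topic_word[:, word_ids]             # shape (K, N): P(w_n | z)
    for _ in range(n_iter):
        # E-step: responsibility of each topic for each word, P(z | w_n, theta).
        resp = theta[:, None] * word_probs
        resp /= resp.sum(axis=0, keepdims=True)
        # M-step: new mixture = average responsibility over the document's words.
        theta = resp.mean(axis=1)
    return theta

vocab = ["vote", "country", "game", "team"]
topic_word = np.array([
    [0.6, 0.3, 0.05, 0.05],   # hypothetical "politics" topic
    [0.05, 0.05, 0.5, 0.4],   # hypothetical "sports" topic
])
new_doc = ["vote", "vote", "country", "game"]
ids = [vocab.index(w) for w in new_doc]
theta_new = infer_mixture(ids, topic_word)
print(np.round(theta_new, 2))  # mostly "politics", with some weight on "sports"
```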

Generate collections of words with desired mixture

Being a generative model, LDA also allows us to generate collections of words based on the model parameters.

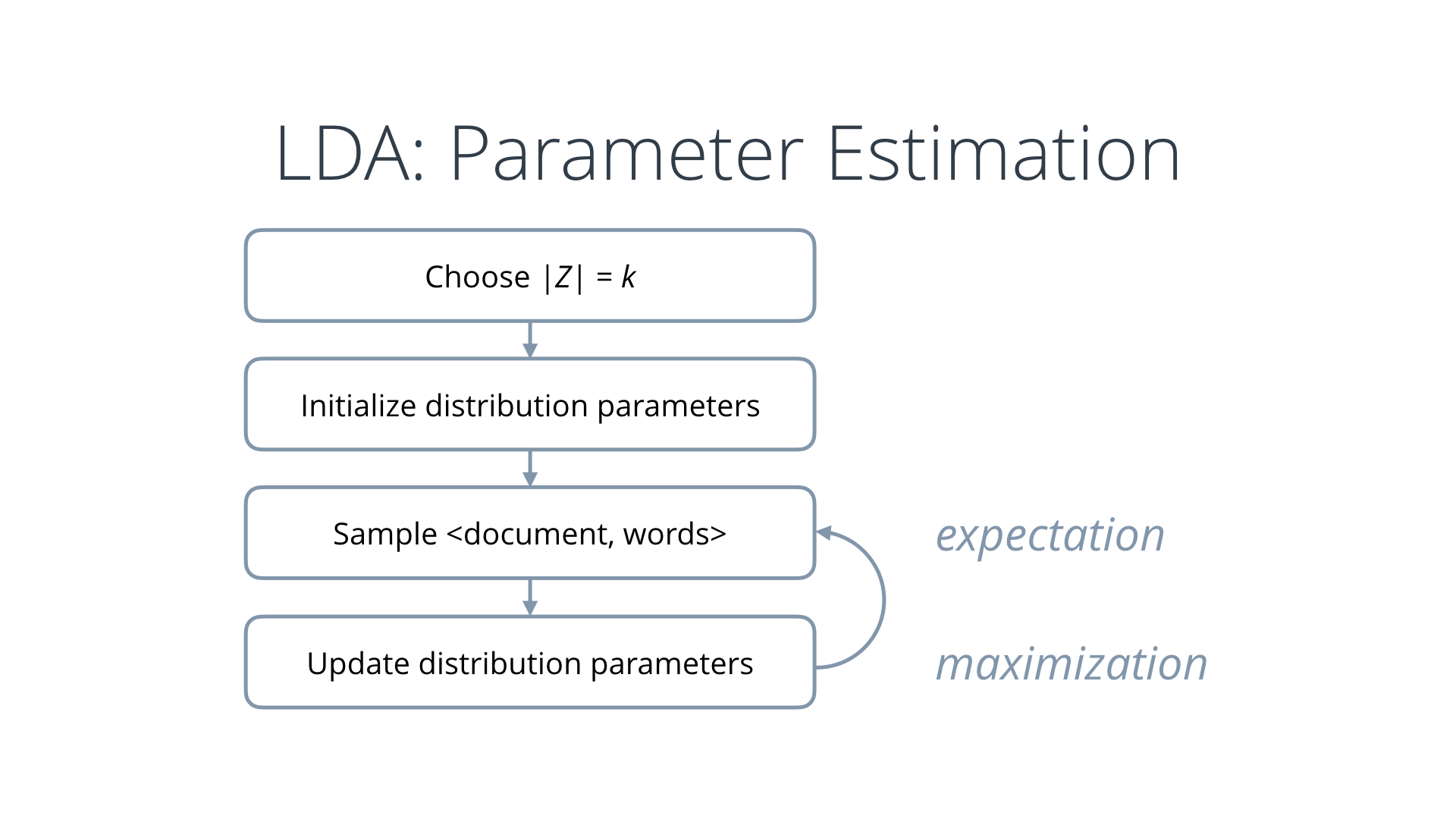

LDA: Parameter Estimation

Recall that all we have available is a set of documents, each made up of a collection of words. So how do we compute these parameters when we don’t even know what the topics are, or which documents relate to which topics? This is a classic unsupervised learning problem!

These quantities must be estimated simultaneously. Here’s the basic idea:

- Start by choosing a desired number of topics k; this can be an educated guess based on your knowledge of the corpus, or just the number of topics you want to extract. Also initialize the probability distribution parameters with random values.

- Now using these distributions, sample a document, and some words from that document.

- Update the distribution parameters to maximize the likelihood that these distributions would generate the actual words observed in the document.

- Repeat this over all available documents, and iterate for multiple passes, until the distributions converge, i.e. stop changing much.

The exact algorithm is more involved, but at a high level, this is an Expectation-Maximization approach, similar to finding k-means clusters.
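Full LDA estimation typically relies on approximate methods such as variational EM or Gibbs sampling. The sketch below therefore shows EM on the simpler decomposition P(t | d) = Σ_z P(z | d) P(t | z) without Dirichlet priors, a model known as probabilistic latent semantic analysis (pLSA). It follows the steps above: choose k, initialize randomly, alternate E- and M-steps, and stop when the log-likelihood stops changing much. The function name and the toy count matrix are hypothetical.

```python
import numpy as np

def fit_plsa(counts, n_topics, n_iter=500, tol=1e-6, seed=0):
    """EM for P(t | d) = sum_z P(z | d) P(t | z) (pLSA, no Dirichlet priors).

    counts: (D, V) array of term counts; every document needs at least one token.
    Returns doc_topic (D, K) and topic_term (K, V), each row a probability distribution.
    """
    rng = np.random.default_rng(seed)
    n_docs, n_terms = counts.shape
    # Choose k and initialize both distributions with random values.
    doc_topic = rng.dirichlet(np.ones(n_topics), size=n_docs)
    topic_term = rng.dirichlet(np.ones(n_terms), size=n_topics)
    prev_ll = -np.inf
    for _ in range(n_iter):
        # E-step: P(z | d, t) for every document-term pair, shape (D, K, V).
        joint = doc_topic[:, :, None] * topic_term[None, :, :]
        mix = np.maximum(joint.sum(axis=1, keepdims=True), 1e-300)  # P(t | d), shape (D, 1, V)
        resp = joint / mix
        # M-step: re-estimate both distributions from the expected counts.
        expected = counts[:, None, :] * resp                          # shape (D, K, V)
        topic_term = expected.sum(axis=0)
        topic_term /= topic_term.sum(axis=1, keepdims=True)
        doc_topic = expected.sum(axis=2)
        doc_topic /= doc_topic.sum(axis=1, keepdims=True)
        # Stop once the log-likelihood of the observed counts stops changing much.
        ll = np.sum(counts * np.log(mix[:, 0, :]))
        if ll - prev_ll < tol:
            break
        prev_ll = ll
    return doc_topic, topic_term

# Columns: vote, country, game, team.
counts = np.array([
    [4, 3, 0, 0],
    [3, 4, 1, 0],
    [0, 0, 5, 4],
    [0, 1, 4, 5],
])
doc_topic, topic_term = fit_plsa(counts, n_topics=2)
print(np.round(doc_topic, 2))
print(np.round(topic_term, 2))
```

As with k-means, the result depends on the random initialization, so running several seeds and keeping the highest-likelihood fit is a common safeguard.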